Meta could face further squeeze on surveillance ads model in EU | TechCrunch

Meta’s tracking ads business could be facing further legal blows in the European Union: An influential advisor to the bloc’s top court affirmed Thursday that the region’s privacy laws limit how long people’s data can be used for targeted advertising.

In the non-legally binding opinion, Advocate General Athanasios Rantos said use of personal data for advertising must be limited.

This is important because Meta’s tracking ads business relies upon ingesting vast amounts of personal data to build profiles of individuals and target them with advertising messages. Any restrictions on how it can use personal data could curb its ability to profit from people’s attention.

A final ruling on the point remains pending — typically these arrive three to six months after an AG opinion — but the Court of Justice of the EU (CJEU) often takes a similar view to its advisors.

The CJEU’s role, meanwhile, is to clarify how EU law applies, so its rulings are keenly watched: They steer how lower courts and regulators uphold the law.

Proportionality in the frame

Per AG Rantos, data retention for ads must take account of the principle of proportionality, a general principle of EU law that also applies to the bloc’s privacy framework, the General Data Protection Regulation (GDPR), including when determining a lawful basis for processing. A key requirement of the regulation is that anyone handling people’s information must have a legal basis for doing so.

In a press release, the CJEU writes (with emphasis): “Rantos proposes that the Court should rule that the GDPR precludes the processing of personal data for the purposes of targeted advertising without restriction as to time. The national court must assess, based inter alia on the principle of proportionality, the extent to which the data retention period and the amount of data processed are justified having regard to the legitimate aim of processing those data for the purposes of personalised advertising.”

The CJEU is considering two legal questions referred to it by a court in Austria. These relate to a privacy challenge, dating back to 2020, brought against Meta’s adtech business by Max Schrems, a lawyer and privacy campaigner. Schrems is well known in Europe, having already racked up multiple privacy wins against Meta that have led to fines totalling well over a billion dollars since the GDPR came into force.

An internal memo by Meta engineers, obtained by Motherboard/Vice back in 2022, painted a picture of a company unable to apply policies limiting its use of people’s data once ingested by its ads systems because it had, as the document put it, “built a system with open borders”. Meta disputed the characterization, claiming at the time that the document “does not describe our extensive processes and controls to comply with privacy regulations”.

But it’s clear that Meta’s core business model relies on its ability to track and profile web users to power its microtargeted advertising. So any hard legal limits on its ability to process and retain people’s data could have big implications for its profitability. To wit: Last year, Meta suggested around 10% of its worldwide ad revenue is generated in the EU.

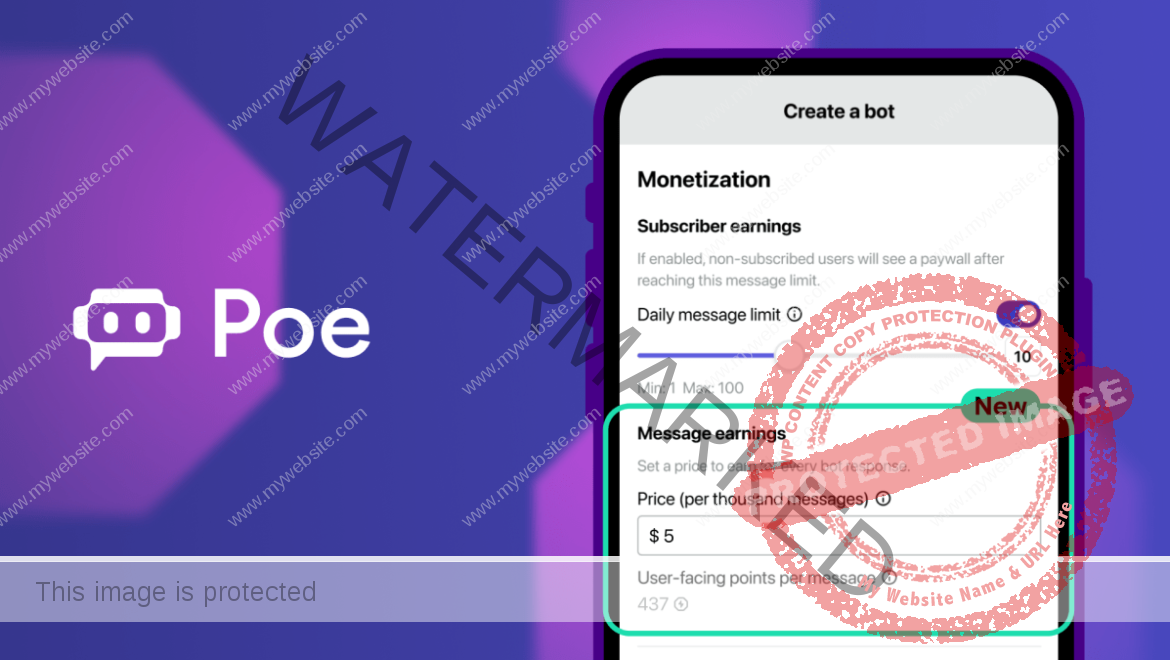

In recent months, European Union lawmakers and regulators have also been dialling up pressure on the adtech giant to ditch its addiction to surveillance advertising. When the Commission opened an investigation last month into Meta’s binary “consent or pay” user offer under the market power-focused Digital Markets Act, it explicitly name-checked the existence of alternative ad models, such as contextual advertising.

A key GDPR steering body, the European Data Protection Board (EDPB), also put out guidance on “consent or pay” earlier this month, stressing that larger ad platforms like Meta must give users a “real choice” about decisions affecting their privacy.

No sensitive data free-for-all for ads

In today’s opinion, AG Rantos also addressed a second question referred to the court: Namely, whether making certain personal information “manifestly” public (in this case, info related to Schrems’ sexual orientation) gives Meta carte blanche to retrospectively claim it can use the sensitive data for ad targeting.

Schrems had complained that he received ads on Facebook targeting his sexuality. He subsequently discussed his sexuality publicly but argued that the GDPR principle of purpose limitation must still apply in parallel, referencing a core plank of the regulation that limits further processing of personal data (i.e., processing for a new purpose without a new valid legal basis, such as the user’s consent).

AG Rantos’ opinion appears to align with Schrems’ position. Discussing this point, the press release notes (again with emphasis): “while data concerning sexual orientation fall into the category of data that enjoy particular protection and the processing of which is prohibited, that prohibition does not apply when the data are manifestly made public by the data subject. Nevertheless, this position does not in itself permit the processing of those data for the purposes of personalised advertising.”

In an initial reaction to the AG’s views on both legal questions, Schrems, founder and chairman of the European privacy rights nonprofit noyb, welcomed the opinion via his lawyer in the case against Meta, Katharina Raabe-Stuppnig.

“At the moment, the online advertising industry simply stores everything forever. The law is clear that the processing must stop after a few days or weeks. For Meta, this would mean that a large part of the information they have collected over the last decade would become taboo for advertising,” she wrote in a statement highlighting the importance of limits on data retention for ads.

“Meta has basically been building a huge data pool on users for 20 years now, and it is growing every day. EU law, however, requires ‘data minimisation’. If the Court follows the opinion, only a small part of this pool will be allowed to be used for advertising, even if [users] have consented to ads,” she added.

On the issue of further use of sensitive data that’s been made public, she said: “This issue is highly relevant for anyone who makes a public statement. Do you retroactively waive your right to privacy for even totally unrelated information, or can only the statement itself be used for the purpose intended by the speaker? If the Court interprets this as a general ‘waiver’ of your rights, it would chill any online speech on Instagram, Facebook or Twitter.”

Asked for its reaction to the AG opinion, Meta spokesman Matthew Pollard told TechCrunch the company would await the court’s ruling.

The company also claims to have “overhauled privacy” since 2019, saying it has spent more than €5 billion on EU-related privacy compliance and on expanding user controls. “Since 2019, we have overhauled privacy at Meta and invested over five billion Euros to embed privacy at the heart of our products,” wrote Meta in an emailed statement. “Everyone using Facebook has access to a wide range of settings and tools that allow people to manage how we use their information.”

On sensitive data, Pollard highlighted another claim by Meta that it “does not use sensitive data that users provide us to personalise ads”, as the statement puts it.

“We also prohibit advertisers from sharing sensitive information in our terms and we filter out any potentially sensitive information that we’re able to detect,” Meta also wrote, adding: “Further, we’ve taken steps to remove any advertiser targeting options based on topics perceived by users to be sensitive.”

In April 2021, Meta announced a policy change in this area — saying it would no longer allow advertisers to target users with ads based on sensitive categories such as their sexual orientation, race, political beliefs or religion. However, in May 2022, an investigation by the data journalism nonprofit, The Markup, found it was easy for advertisers to circumvent Meta’s ban by using “obvious proxies”.

A CJEU ruling from August 2022 also looks highly relevant here, as the court affirmed then that sensitive inferences should be treated as sensitive personal data under the GDPR. Put another way, for the processing to be lawful in the EU, targeting ads using a proxy for sexual orientation requires the same stringent standard of “explicit consent” as targeting ads at a person’s sexual orientation directly.